CI/CD Side By Side: Jenkins and JFrog Pipelines

Have you wanted to explore JFrog Pipelines for DevOps pipeline automation but just haven’t been able to get started? To learn something new, it can help to start with what you already know well.

Whether you’ve dabbled in CI/CD or are a veteran, you’re likely to have some working knowledge of Jenkins. For 10 years, the open source automation server has led the field and accumulated an ecosystem of some 1,600 plugins — including the Jenkins plugin for Artifactory — that help support continuous integration and continuous delivery.

JFrog Pipelines is the CI/CD automation component of the JFrog DevOps Platform, a complete system for end-to-end production and delivery of binaries. As a modern, fully cloud-native CI/CD solution that’s naturally integrated with Artifactory’s binary repository management, Pipelines can provide a centralized automation service that scales for any need from small startup to global enterprise.

But what are the real differences between the two? Let’s take a look at how you might code the same basic pipeline to build and deploy an application, first in Jenkins, and then in the JFrog Pipelines DSL. Both examples will use Artifactory in a JFrog Platform instance named Kaloula.

First Look: The Jenkins Version

Our Jenkins version of the pipeline runs on a single agent on a virtual machine, and performs a sequence of 9 actions (stages):

| Stage | Description |
| --- | --- |
| Clone | Clones the source repo for the duration of the pipeline execution |
| Artifactory Configuration | Configures the Jenkins Artifactory Plugin for this pipeline |
| Build Maven Project | Builds the Maven project according to pom.xml |
| Build Docker Image | Builds and tags the Docker image using the Docker CLI |
| Push Image to Artifactory | Pushes the completed Docker image to a Docker registry in Artifactory |
| Publish Build Info | Publishes the build-info for the completed Docker image to Artifactory |
| Install Helm | Installs Helm 3 into the agent environment |
| Configure Helm & Artifactory | Configures the Helm client to use the Helm repository in Artifactory |
| Deploy Chart | Deploys the application using the Helm chart |

Our Jenkins pipeline is written in Apache Groovy, a Java-syntax-compatible object-oriented programming language for the Java platform. Since you’re already familiar with Jenkins, this code should look familiar and need little explanation.

To run the Groovy code for this Jenkins pipeline, an administrator will have to install additional plugins:

- Jenkins plugin for Artifactory to help build our Maven project and push to our binary repositories

- Credentials binding plugin for secrets management

pipeline {
    agent any

    stages {
        stage('Clone') {
            steps {
                git branch: 'master', url: "https://github.com/talitz/spring-petclinic-ci-cd-k8s-example.git"
            }
        }

        stage('Artifactory Configuration') {
            steps {
                // Register the Artifactory server, then the Maven resolver and deployer that use it
                rtServer(
                    id: "kaloula-artifactory",
                    url: "https://kaloula.jfrog.io/artifactory",
                    credentialsId: "admin.jfrog"
                )
                rtMavenResolver(
                    id: 'maven-resolver',
                    serverId: 'kaloula-artifactory',
                    releaseRepo: 'libs-release',
                    snapshotRepo: 'libs-snapshot'
                )
                rtMavenDeployer(
                    id: 'maven-deployer',
                    serverId: 'kaloula-artifactory',
                    releaseRepo: 'libs-release-local',
                    snapshotRepo: 'libs-snapshot-local',
                    threads: 6,
                    properties: ['BinaryPurpose=Technical-BlogPost', 'Team=DevOps-Acceleration']
                )
            }
        }

        stage('Build Maven Project') {
            steps {
                rtMavenRun(
                    tool: 'Maven 3.3.9',
                    pom: 'pom.xml',
                    goals: '-U clean install',
                    deployerId: "maven-deployer",
                    resolverId: "maven-resolver"
                )
            }
        }

        stage('Build Docker Image') {
            steps {
                script {
                    docker.build("kaloula-docker.jfrog.io/" + "pet-clinic:1.0.${env.BUILD_NUMBER}")
                }
            }
        }

        stage('Push Image to Artifactory') {
            steps {
                rtDockerPush(
                    serverId: "kaloula-artifactory",
                    image: "kaloula-docker.jfrog.io/" + "pet-clinic:1.0.${env.BUILD_NUMBER}",
                    targetRepo: 'docker',
                    properties: 'project-name=jfrog-blog-post;status=stable'
                )
            }
        }

        stage('Publish Build Info') {
            steps {
                rtPublishBuildInfo(
                    serverId: "kaloula-artifactory"
                )
            }
        }

        stage('Install Helm') {
            steps {
                // Download the installer, run it, then confirm that Helm is on the PATH
                sh """
                    curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3
                    chmod 700 get_helm.sh && ./get_helm.sh
                    helm version
                """
            }
        }

        stage('Configure Helm & Artifactory') {
            steps {
                withCredentials([[$class: 'UsernamePasswordMultiBinding', credentialsId: 'admin.jfrog', usernameVariable: 'USERNAME', passwordVariable: 'PASSWORD']]) {
                    // Single quotes: the shell, not Groovy, expands the secrets, so they stay out of the build log
                    sh '''
                        helm repo add helm https://kaloula.jfrog.io/artifactory/helm --username "$USERNAME" --password "$PASSWORD"
                        helm repo update
                    '''
                }
            }
        }

        stage('Deploy Chart') {
            steps {
                withCredentials([kubeconfigContent(credentialsId: 'k8s-cluster-kubeconfig', variable: 'KUBECONFIG_CONTENT')]) {
                    // BUILD_NUMBER is also exported to the shell, so no Groovy interpolation is needed here
                    sh '''
                        echo "$KUBECONFIG_CONTENT" > config && cp config ~/.kube/config
                        helm upgrade --install spring-petclinic-ci-cd-k8s-example helm/spring-petclinic-ci-cd-k8s-chart --kube-context=gke_dev_us-west1-a_artifactory --set=image.tag=1.0.${BUILD_NUMBER}
                    '''
                }
            }
        }
    }
}

Second Look: The Pipelines Version

JFrog Pipelines uses a DSL based on YAML, the language of cloud native. Like Kubernetes configurations and Helm charts, this data serialization language of key-value pairs defines the operational parameters of the work to be done. (Jenkins also has a YAML plugin in incubation; Pipelines uses YAML natively.)

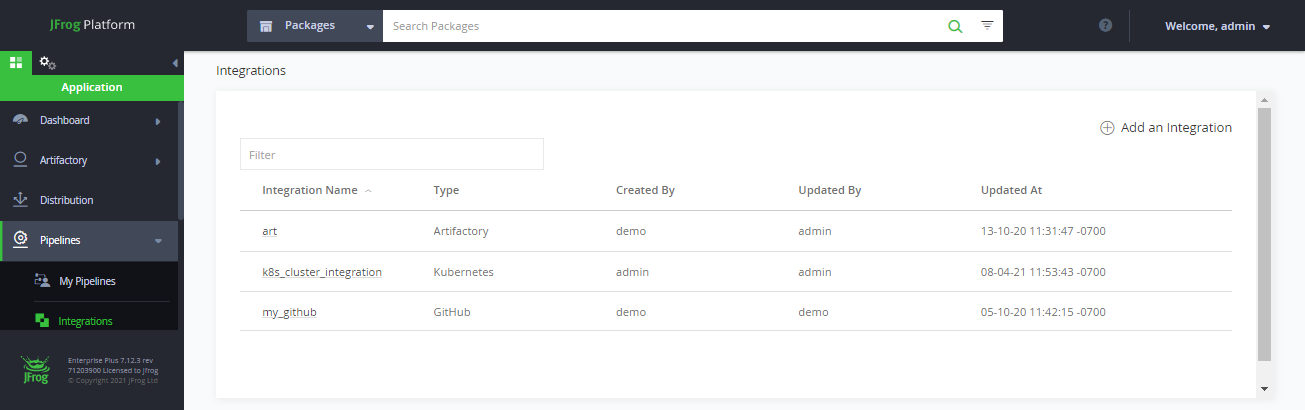

Integrations

For your pipeline to run, it will need to be able to connect to other tools and services, such as your version control system, cloud storage, Kubernetes, team messaging, and of course Artifactory. There’s no need to install and manage plugins, or to resolve their conflicts; Pipelines comes with out-of-the-box integrations for the tools you’re likely to use most, so connecting to those services is a snap.

You add integrations to Pipelines through the JFrog Platform UI. Pipelines integrations keep your secrets safe by storing your credentials securely in an encrypted vault, and admins can limit whose pipelines can access those services through JFrog Platform permissions management.

For this example, we only need integrations to connect to:

- Artifactory through our permissions-limited user account

- The GitHub user account where our code and pipeline definitions are stored

- The Kubernetes cluster where our application will be deployed
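As a rough sketch of how a step consumes an integration, the fragment below names the Artifactory integration in a generic Bash step. The pipeline and step names are hypothetical. The `int_art_url` variable assumes the integration was named `art` in the UI and that Pipelines exposes its URL to the step under that naming pattern, so confirm the exact variable names against the integration's documentation before relying on them.

```yaml
pipelines:
  - name: integration_demo              # hypothetical pipeline name
    steps:
      - name: show_artifactory_url      # hypothetical step name
        type: Bash
        configuration:
          integrations:
            - name: art                 # must match the integration name chosen in the UI
        execution:
          onExecute:
            # Assumed variable pattern: int_<integration name>_url
            - echo "Artifactory is at ${int_art_url}"
```

Note that no credentials appear in the YAML itself; the step refers to the integration only by name.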

Resources Declarations

Pipelines Resources represent the “objects” in your pipeline that are the inputs and outputs of your steps. Internally to Pipelines, they are data records that contain information about the resource needed for a step in a pipeline to execute and can also be used to store information to share with other steps.

A resource can be used to trigger the execution of a step when that resource has changed. For example: on a commit to a source repo, or the production of build-info by a successful build.
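To illustrate, here is a minimal sketch of a step that reads two input resources but lets only one of them start a run. The pipeline and step names are hypothetical, and the `trigger` flag is shown as we understand the DSL, with `true` assumed to be the default when it is omitted.

```yaml
pipelines:
  - name: trigger_demo                        # hypothetical pipeline name
    steps:
      - name: build_on_commit_only            # hypothetical step name
        type: Bash
        configuration:
          inputResources:
            - name: petclinic_repository      # a new commit starts this step
            - name: spring_helm_chart_resource
              trigger: false                  # readable by the step, but a change here does not start it
        execution:
          onExecute:
            - echo "Started by a change to petclinic_repository"
```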

Our resources are declared through the Pipelines DSL, and can be in the same file with our pipeline declaration. The resources we need for our pipeline are:

- The source code repository in GitHub

- The Docker image we will build and push to Artifactory

- The build-info metadata resulting from the build

- The Helm chart stored in Artifactory that we will use to deploy the built image to K8s

resources:
  # Source code; a commit to master can trigger the pipeline
  - name: petclinic_repository
    type: GitRepo
    configuration:
      gitProvider: my_github
      path: jfrogtw/spring-petclinic-ci-cd-k8s-example
      branches:
        include: master

  # Build metadata published to Artifactory
  - name: petclinic_build_info
    type: BuildInfo
    configuration:
      sourceArtifactory: art
      buildName: build_and_dockerize_spring_pet_clinic
      buildNumber: 1

  # Docker image stored in the Artifactory Docker registry
  - name: petclinic_image
    type: Image
    configuration:
      registry: art
      sourceRepository: docker-local
      imageName: kaloula.jfrog.io/docker-local/pet-clinic
      imageTag: 1.0.0
      autoPull: true

  # Helm chart used to deploy the image to Kubernetes
  - name: spring_helm_chart_resource
    type: HelmChart
    configuration:
      sourceArtifactory: art
      repository: helm
      chart: spring-petclinic-ci-cd-k8s-chart
      version: '1.0.0'

Pipeline Declaration

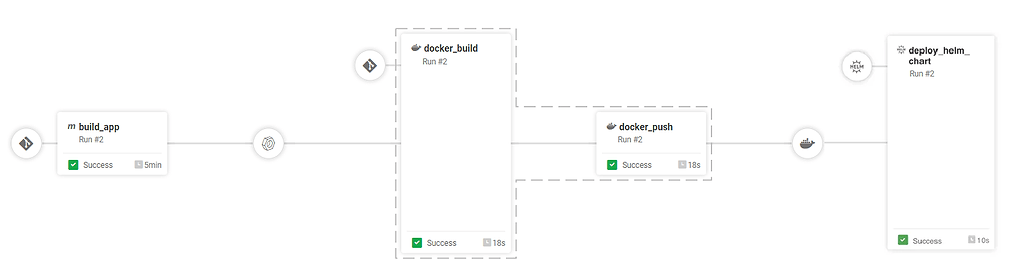

A pipeline is composed of a sequence of Pipelines steps that act on resources.

Our example pipeline in JFrog Pipelines uses only 4 native steps that enable us to perform common CI/CD actions out-of-the-box without having to write our own command-line scripts.

| Step Action | Native Step Type | Description |
| --- | --- | --- |
| Build Maven Project | MvnBuild | Builds the Maven project according to a pom.xml configuration file |
| Build Docker Image | DockerBuild | Builds and tags the Docker image using the Docker CLI |
| Push Image to Artifactory with Build Info | DockerPush | Pushes the completed Docker image and build-info to a Docker registry in Artifactory |
| Deploy Chart | HelmDeploy | Deploys the application using the Helm chart in Artifactory |

With our integrations added and resources declared, our example pipeline is ready to run:

pipelines:
  - name: build_and_dockerize_spring_pet_clinic
    steps:
      # Build the Maven project and publish its build-info
      - name: build_app
        type: MvnBuild
        configuration:
          integrations:
            - name: art
          sourceLocation: .
          autoPublishBuildInfo: true
          mvnCommand: clean install -s settings.xml
          configFileLocation: .
          configFileName: maven.yaml
          inputResources:
            - name: petclinic_repository
          outputResources:
            - name: petclinic_build_info

      # Build and tag the Docker image
      - name: docker_build
        type: DockerBuild
        configuration:
          affinityGroup: dbp_group
          dockerFileLocation: .
          dockerFileName: Dockerfile
          dockerImageName: kaloula.jfrog.io/docker-local/pet-clinic
          dockerImageTag: 1.0.${run_number}
          inputResources:
            - name: petclinic_repository
            - name: petclinic_build_info
          integrations:
            - name: art

      # Push the image and its build-info to Artifactory
      - name: docker_push
        type: DockerPush
        configuration:
          inputSteps:
            - name: docker_build
          affinityGroup: dbp_group
          targetRepository: docker-local
          autoPublishBuildInfo: true
          integrations:
            - name: art
          outputResources:
            - name: petclinic_image

      # Deploy the application to Kubernetes with Helm 3
      - name: deploy_helm_chart
        type: HelmDeploy
        configuration:
          integrations:
            - name: k8s_cluster_integration
          helmVersion: 3
          lint: true
          flags: --kube-context=gke_dev_us-west1-a_artifactory --set=image.tag=1.0.${run_number}
          inputResources:
            - name: petclinic_image
            - name: spring_helm_chart_resource
          releaseName: spring-petclinic-ci-cd-k8s-example
          valueFilePaths:
            - values.yaml

Here are some important details to help you understand it:

What Makes It Run

Each step in JFrog Pipelines can be configured to trigger execution on an event, or on completion of a prior step in the sequence. Here’s how our example works:

- The first step of our pipeline will trigger on any new commit to the GitHub petclinic_repository.

- The steps to build and push a petclinic_image Docker image will run when the Maven project is successfully built and produces a new petclinic_build_info.

- The successful production of petclinic_image to the Docker registry in Artifactory triggers its final deployment to Kubernetes.

Where Will it Run?

The entire Jenkins version ran in a single VM agent, with the agent any directive specifying whichever VM is available from the pool.

In Pipelines, each step in the pipeline executes in a runtime container provisioned to a build node VM from the pool. If required, any step can specify its own specific runtime image that has the environment it needs, or a Pipelines DSL can specify a default runtime for all steps. But you don’t have to specify any — our example pipeline doesn’t, so all steps execute in the default runtime set by the administrator.
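For readers who do want to pin a runtime, the sketch below shows one plausible shape: a pipeline-wide default plus a single step that overrides it with its own image. The pipeline name, step names, and the custom image name and tag are all hypothetical, and the `runtime` keys reflect our reading of the DSL, so treat this as an illustration rather than a tested configuration.

```yaml
pipelines:
  - name: runtime_demo                        # hypothetical pipeline name
    configuration:
      runtime:                                # default runtime for every step in this pipeline
        type: image
        image:
          auto:
            language: java
            versions:
              - "11"
    steps:
      - name: uses_pipeline_default           # hypothetical step; inherits the runtime above
        type: Bash
        execution:
          onExecute:
            - java -version

      - name: uses_own_image                  # hypothetical step; overrides the default
        type: Bash
        configuration:
          runtime:
            type: image
            image:
              custom:
                name: registry.example.com/build/maven-jdk   # hypothetical image name
                tag: "1.0"                                    # hypothetical tag
        execution:
          onExecute:
            - mvn --version
```

Omitting both `runtime` sections, as the example pipeline in this article does, leaves every step on the administrator's default image.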

Where Did the Rest Go?

Perhaps the biggest difference from the Jenkins pipeline is what is absent from the Pipelines DSL:

- To start, there’s no need to clone the source repo.

- There’s no need to perform any configuration of Artifactory. As a naturally integrated part of the JFrog Platform, Pipelines can get to work building our app right away.

- There’s no need for CLI commands to perform commonplace build actions: declarative native steps command Maven, Artifactory, Docker, and Helm for you. Developers can always use a generic step to issue command-line instructions, just as they would in a Jenkins stage. But with Pipelines’ native steps, you don’t have to; you can specify only what you want done, not how to get it done. If you need your step to issue additional commands for configuration or cleanup, you can, but often they won’t be needed.

- With Pipelines, publishing build-info from your build no longer has to be a separate action to remember, but can be an inherent part of your build operation. To maintain this DevOps best practice, all you have to do is say, “Yes, please” by setting autoPublishBuildInfo to true.

- There’s no need to configure K8s from your pipeline. The kubeconfig is part of your Kubernetes Pipelines integration, where it’s encrypted and kept secret along with your K8s credentials.

- There’s also no need to install or configure Helm — it’s already part of the runtime container. The standard runtime images shipped with Pipelines include the most commonly used services (e.g., Docker, Helm, JFrog CLI) so that steps can just expect them to be installed and at the ready.
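When a native step does need extra commands for configuration or cleanup, they can be attached as execution hooks. The sketch below reuses the docker_build step from this article and adds two hooks; the pipeline name and the hook commands are our own illustration, and the hook names (`onStart`, `onComplete`) are shown as we understand the DSL.

```yaml
pipelines:
  - name: hooks_demo                          # hypothetical pipeline name
    steps:
      - name: docker_build
        type: DockerBuild
        configuration:
          affinityGroup: dbp_group
          dockerFileLocation: .
          dockerFileName: Dockerfile
          dockerImageName: kaloula.jfrog.io/docker-local/pet-clinic
          dockerImageTag: 1.0.${run_number}
          inputResources:
            - name: petclinic_repository
          integrations:
            - name: art
        execution:
          onStart:
            # Runs before the native build logic
            - echo "Building pet-clinic image with tag 1.0.${run_number}"
          onComplete:
            # Runs after the step finishes, whether it succeeded or failed
            - docker image ls kaloula.jfrog.io/docker-local/pet-clinic
```

The native step still performs the build itself; the hooks only wrap it, which keeps the "what, not how" character of the declaration intact.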

Dive Deeper

Want to explore JFrog Pipelines some more?

- Review the Pipelines Quickstarts for some further examples.

- Dive into the Pipelines Developer Guide to learn about the full capabilities of the JFrog Pipelines DSL.

You’ll find many other reasons to take a closer look at Pipelines, including abilities to:

- Extend pipelines with your own custom steps and resources

- Perform matrix testing

- Create pipelines from templates

Don’t want to convert your existing Jenkins workflows to Pipelines? Learn how you can interoperate between Jenkins and Pipelines to preserve your existing CI investment and/or facilitate eventual migration.

Better yet, get started with Pipelines in the cloud for free!